How A Power BI Performance Expert Can Tame The Challenge of Large Datasets

June 5, 2025

At The Bridge Digital Solutions, we understand that Microsoft Power BI is an incredibly powerful tool for data analysis and visualisation. One of its most important features is Large Datasets, a formula language used for creating custom calculations in Power BI reports.

However, working with large datasets can be challenging—especially for those who are new to Power BI or dealing with complex business logic. Our recent LinkedIn poll showed 25% out of 290 respondents voted ‘Performance on Large Datasets’ as a major challenge in using Power BI.

This is where a Power BI performance expert can add significant value.

Power BI is a powerhouse for business intelligence, letting you connect to diverse data sources, build interactive dashboards, and drive data-informed decisions. But when your data grows in volume, complexity, or velocity, Power BI can start to feel sluggish. As your dataset expands, performance tuning becomes less of a nice-to-have and more of a necessity.

So, what actually causes Power BI to slow down with large datasets? And more importantly—what can you do about it? In this article, we’ll walk through the most common issues, how to diagnose them, and how a Power BI consultant can help you build a scalable, high-performance solution.

Why Large Datasets Strain Power BI

When you load millions of rows into Power BI, you’re asking a lot from your system: CPU, RAM, storage, and the VertiPaq engine all have to work together to deliver fast, interactive results. As dataset size grows, the load on each of these components increases. Here’s why that becomes a problem:

Bloated or Inefficient Data Models

A clean data model is the backbone of a performant Power BI solution. But as datasets scale, inefficiencies in the model become magnified.

Common issues include:

- Importing all columns from source tables—even the unused ones

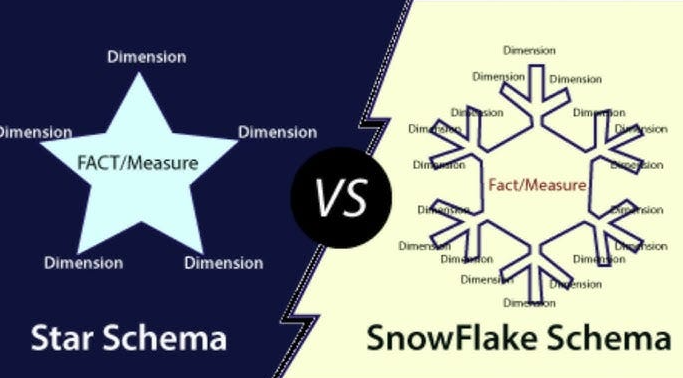

- Using a snowflake schema instead of a star schema. A snowflake schema has many small tables which can slow load times and will need many more relationships. These are what makes this format unsuitable for efficient Power BI use.

- Relying on columns with high cardinality (like unique IDs).

- Having too many relationships or ambiguous joins

Each of these adds complexity and overhead to the Power BI engine, slowing down calculations and rendering.

Expensive DAX Calculations

DAX (Data Analysis Expressions) gives Power BI its calculation power—but it can also slow things down if used incorrectly.

For example:

- Using iterators (SUMX, AVERAGEX, etc.) across large tables

- Repeating the same logic multiple times in one measure

- Poor context transitions using CALCULATE without filters

These issues multiply on large datasets and can add seconds—or even minutes—to your report load times. A Power BI performance expert can certainly help here, and see also our blog on DAX.

Too Many Visuals or Inefficient Reports

Every visual on a Power BI report sends its own query to the engine. Add slicers, filters, and complex calculations, and the system can quickly become overloaded. Note this only happens in direct query mode. This is when no data is stored in the semantic data model itself and the report pulls fresh data straight from the sources on every refresh.

Visuals that show raw data (like tables with many columns and rows) are particularly slow on large datasets. A visually busy report might look impressive, but it’s a performance killer.

Unfiltered or Overloaded Queries

Many teams load raw tables into Power BI and do all the filtering inside the report. That works for small datasets—but on large ones, this approach leads to bloated memory use and slow refresh times.

Best practice is to filter at the source: use Power Query or SQL to limit the size and scope of the data before it hits your data model.

Inefficient Refresh Strategy

Large datasets can take a long time to refresh, especially if you’re refreshing the entire model every hour. Without an incremental refresh strategy, you’re loading millions of rows every time—even if only 1% of the data has changed.

How to Optimise Power BI for Large Datasets

The good news is that Power BI can absolutely handle large datasets—as long as you build it with scalability in mind. Here’s where to focus:

Streamline Your Data Model

Start by reviewing your model with a critical eye:

- Remove unused columns and tables: Less data = faster performance.

- Use a star schema: Dimension and fact tables help the engine work more efficiently (see diagram below).

- Simplify relationships: Avoid many-to-many and bi-directional relationships unless truly needed.

- Reduce column cardinality: Replace long text values with integers where possible. This isn’t necessarily a bad thing, as you need some way to uniquely identify individual rows. However, data can be moved out into a fact table. A process called normalisation.

A Power BI performance expert can help you audit your data model and eliminate performance blockers.

Optimise DAX Measures

DAX is a brilliant tool—but it rewards careful thinking. A few tips:

- Use variables (VAR): This avoids recalculating the same logic multiple times.

- Avoid iterators on large tables unless absolutely necessary.

- Be mindful of context: Improper use of CALCULATE, FILTER, and ALL can drag performance down.

- Pre-aggregate when possible: Don’t force DAX to do heavy lifting at runtime if you can prepare the data in advance.

A Power BI performance expert skilled in DAX can often rewrite a handful of measures and dramatically reduce load times.

Use Aggregation Tables

Aggregation tables are summary versions of your larger datasets. You might have a detail-level sales table with 100M rows, but also a summary table by product and month.

Power BI’s aggregation awareness lets it use these smaller tables automatically, switching to the detail table only when needed. The result? Lightning-fast performance.

Implement Incremental Refresh

Power BI Premium and Pro users can take advantage of Incremental Refresh, which only loads new or changed data rather than refreshing the entire model.

This is ideal for transactional datasets like sales, inventory, or logs—where yesterday’s data rarely changes. It slashes refresh time and reduces strain on your data gateway.

Power BI performance expert can also advise on ways to automate your reporting.

Consider DirectQuery or Hybrid Models

In some cases, importing all the data just isn’t viable. That’s where DirectQuery and Hybrid models come in:

- DirectQuery keeps data in the source system, querying it on demand.

- Hybrid models let you import critical data while DirectQuery-ing less-used tables.

These modes trade some functionality for scale—but they’re essential tools for working with truly massive datasets.

Simplify and Prioritise Visuals

Less is more when it comes to visuals:

- Avoid overloading report pages

- Use slicers and filters efficiently

- Aggregate data before visualising

- Use bookmarks or drill-through pages to manage complexity

Each visual you remove (or simplify) improves performance for every user, every time.

How a Power BI Performance Expert Can Help

When you’re wrestling with performance issues, you don’t need to go it alone. A Power BI performance expert brings experience, tools, and a structured approach to fixing what’s broken.

They can help you:

- Audit your data model and redesign it for scale

- Refactor expensive DAX measures

- Design and implement aggregation tables

- Set up incremental refresh strategies

- Switch from Import to DirectQuery or Hybrid mode

- Provide governance and best practices for sustainable growth

Even a few hours with a consultant can save your team dozens of hours spent waiting on slow reports.

Final Thoughts

Power BI is a fantastic tool—but it’s not magic. When you’re working with large datasets, performance requires careful planning, optimised models, and efficient code.

The best news? All of these problems are solvable. Whether it’s cleaning up your data model, optimising your DAX, or rethinking how you structure your reports, there’s always a path forward.

At The Bridge Digital, we specialise in helping businesses get the most out of Power BI—even at scale. If your reports are slow, your refreshes are failing, or your team is frustrated, let’s talk. We can help you tune your setup, scale confidently, and turn your Power BI investment into real-time, high-value insight.

Email us today or fill out the form below and let us help you build a Power BI solution that performs at any size.